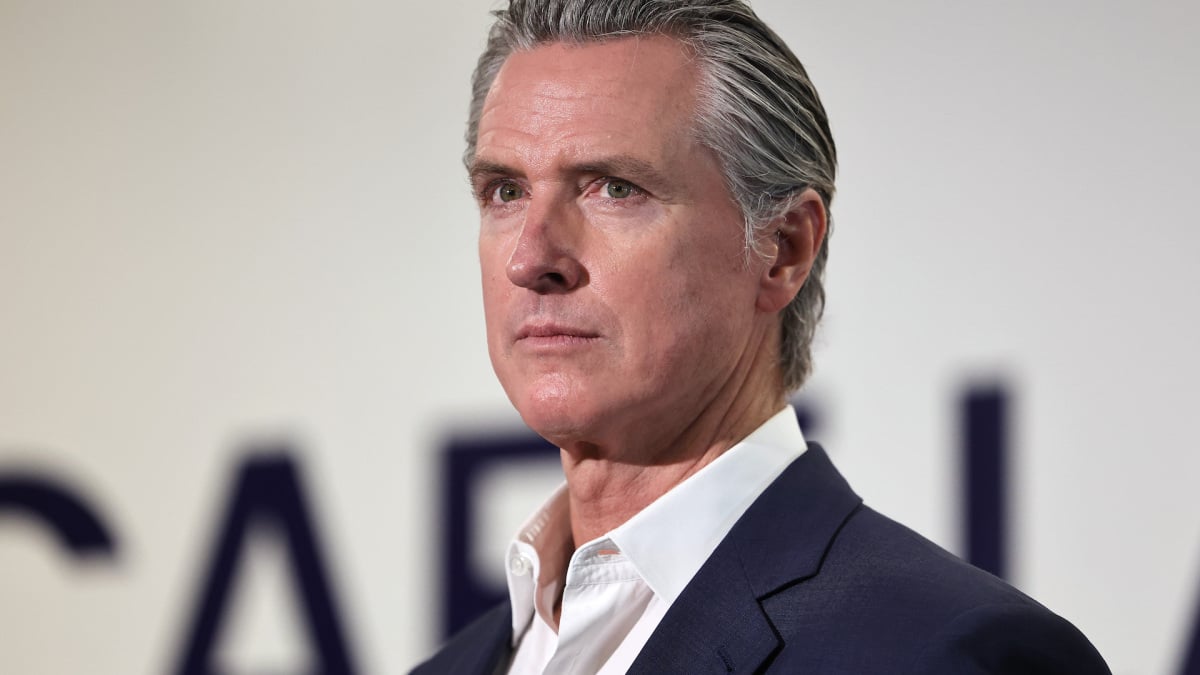

After sustained outcry from child safety advocates, families, and politicians, California Governor Gavin Newsom signed into law a bill designed to curb AI chatbot behavior that experts say is unsafe or dangerous, particularly for teens.

The law, known as SB 243, requires chatbot operators to prevent their products from exposing minors to sexual content and to consistently remind those users that chatbots are not human. Additionally, companies subject to the law must implement a protocol for handling situations in which a user discusses suicidal ideation, suicide, or self-harm.

State senator Steve Padilla, a Democrat representing San Diego, authored and introduced the bill earlier this year. In February, he told Mashable that SB 243 was meant to address urgent emerging safety issues with AI chatbots. Given the technology’s rapid evolution and deployment, Padilla said the “regulatory guardrails are way behind.”

Common Sense Media, a nonprofit group that supports children and parents as they navigate media and technology, declared AI chatbot companions unsafe for teens younger than 18 earlier this year.

The Federal Trade Commission recently launched an inquiry into chatbots acting as companions. Last month, the agency informed major companies with chatbot products, including OpenAI, Alphabet, Meta, and Character Technologies, that it sought information about how they monetize user engagement, generate outputs, and develop so-called characters.

Prior to the passage of SB 243, Padilla lamented how AI chatbot companions can uniquely harm young users: “This technology can be a powerful educational and research tool, but left to their own devices the Tech Industry is incentivized to capture young people’s attention and hold it at the expense of their real world relationships.”

Last year, bereaved mother Megan Garcia filed a wrongful death suit against Character.AI, one of the most popular AI companion chatbot platforms. Her son, Sewell Setzer III, died by suicide following heavy engagement with a Character.AI companion. The suit alleges that Character.AI was designed to “manipulate Sewell – and millions of other young customers – into conflating reality and fiction,” among other dangerous defects.

Garcia, who lobbied on behalf of SB 243, applauded Newsom’s signing.

“Today, California has ensured that a companion chatbot will not be able to speak to a child or vulnerable individual about suicide, nor will a chatbot be able to help a person to plan his or her own suicide,” Garcia said in a statement.

SB 243 also requires companion chatbot platforms to produce an annual report on the connection between use of their product and suicidal ideation. It permits families to pursue private legal action against “noncompliant and negligent developers.”

California is quickly becoming a leader in regulating AI technology. Last week, Governor Newsom signed legislation requiring AI labs to disclose both the potential harms of their technology and information about their safety protocols.

As Mashable’s Chase DiBenedetto reported, the bill is meant to “keep AI developers accountable to safety standards even when facing competitive pressure and includes protections for potential whistleblowers.”

On Monday, Newsom also signed into law two separate bills aimed at improving online child safety. AB 56 requires warning labels for social media platforms, highlighting the toll that addictive social media feeds can take on children's mental health and well-being. The other bill, AB 1043, implements an age verification requirement that will go into effect in 2027.